Chapter 3 Numerical Taxonomy and Ordination

3.1 Introduction

Nearly every aspect of soil survey involves the question: “Is X more similar to Y or to Z?” The quantification of similarity within a collection of horizons, pedons, components, map units, or even landscapes represents an exciting new way to enhance the precision and accuracy of the day-to-day work of soil scientists. After completing this module, you should be able to quantitatively organize objects based on measured or observed characteristics in a consistent and repeatable manner. Perhaps you will find a solution to the long-standing “similar or dissimilar” question.

3.1.1 Objectives

- Learn essential vocabulary used in the field of numerical taxonomy. Review some of the literature.

- Gain experience with R functions and packages commonly used for clustering and ordination.

- Learn how to create and interpret a distance matrix and appropriate distance metrics.

- Learn how to create and interpret a dendrogram.

- Lean the basics and application of hierarchical clustering methods.

- Lean the basics and application of partitioning clustering methods.

- Learn the basics and application of ordination methods.

- Apply skills to a range of data sources for soils and vegetation.

- Apply techniques from numerical taxonomy to addressing the “similar or dissimilar” question.

- Learn some strategies for coping with missing data.

3.2 Whirlwind Tour

Most of the examples featured in this whirlwind tour are based on soil data from McGahan, D.G., Southard, R.J, Claassen, V.P. 2009. Plant-available calcium varies widely in soils on serpentinite landscapes. Soil Sci. Soc. Am. J. 73: 2087-2095. These data are available in the dataset “sp4” that is built into aqp package for R.

3.2.1 Similarity, Disimilarty, and Distance

There are shelves of books and thousands of academic articles describing the theory and applications of “clustering” and “ordination” methods. This body of knowledge is commonly described as the field of numerical taxonomy (Sneath and Sokal 1973). Central to this field is the quantification of similarity among “individuals” based on a relevant set of “characteristics.” Individuals are typically described as rows of data with a single characteristic per column, together referred to as a data matrix. For example:

| name | clay | sand | Mg | Ca | CEC_7 |

|---|---|---|---|---|---|

| A | 21 | 46 | 25.7 | 9.0 | 23.0 |

| ABt | 27 | 42 | 23.7 | 5.6 | 21.4 |

| Bt1 | 32 | 40 | 23.2 | 1.9 | 23.7 |

| Bt2 | 55 | 27 | 44.3 | 0.3 | 43.0 |

Quantitative measures of similarity are more conveniently expressed as distance, or dissimilarity; in part because of convention and in part because of computational efficiency. In the simplest case, dissimilarity can be computed as the shortest distance between individuals in property-space. Another name for the shortest linear distance between points is the Euclidean distance. Evaluated in two dimensions (between individuals \(p\) and \(q\)), the Euclidean distance is calculated as follows:

\[D(p,q) = \sqrt{(p_{1} - q_{1})^{2} + (p_{2} - q_{2})^{2}}\]

where \(p_{1}\) is the 1st characteristic (or dimension) of individual \(p\).

There are many other ways to define “distance” (e.g. distance metrics), but they will be covered later.

Using the sand and clay percentages from the data above, dissimilarity is represented as the length of the line connecting any two individuals in property space.

The following is a matrix of all pair-wise distances (the distance matrix):

| A | ABt | Bt1 | Bt2 | |

|---|---|---|---|---|

| A | 0.0 | 7.2 | 12.5 | 38.9 |

| ABt | 7.2 | 0.0 | 5.4 | 31.8 |

| Bt1 | 12.5 | 5.4 | 0.0 | 26.4 |

| Bt2 | 38.9 | 31.8 | 26.4 | 0.0 |

Note that this is the full form of the distance matrix. In this form, zeros are on the diagonal (i.e. the distance between an individual and itself is zero) and the upper and lower “triangles” are symmetric. The lower triangle is commonly used by most algorithms to encode pair-wise distances.

| A | ABt | Bt1 | |

|---|---|---|---|

| ABt | 7.2 | ||

| Bt1 | 12.5 | 5.4 | |

| Bt2 | 38.9 | 31.8 | 26.4 |

Interpretation of the matrix is simple: Individual “A” is more like “ABt” than like “Bt1.” It is important to note that quantification of dissimilarity (distance) among individuals is always relative: “X is more like Y, as compared to Z.”

3.2.1.1 Distances You Can See: Perceptual Color Difference

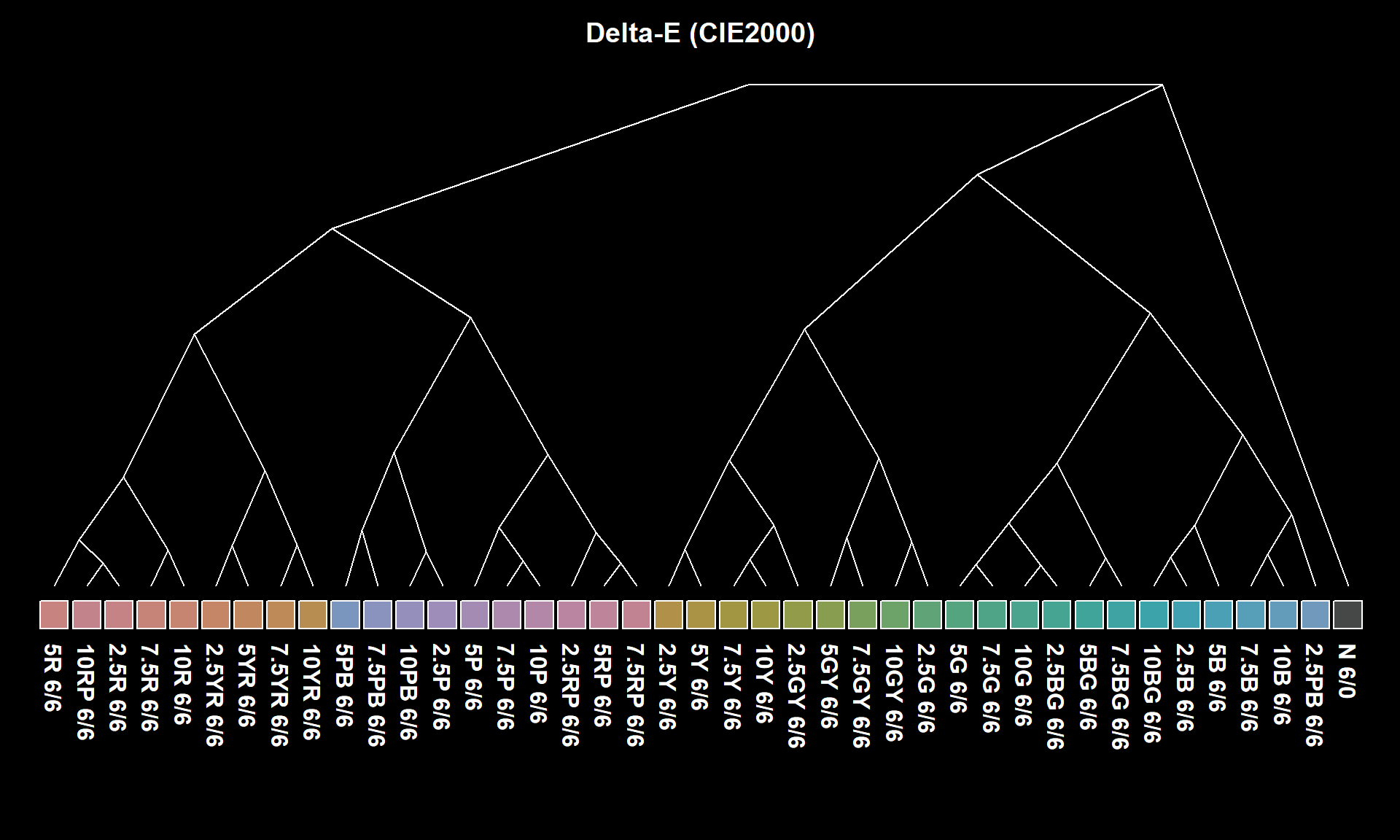

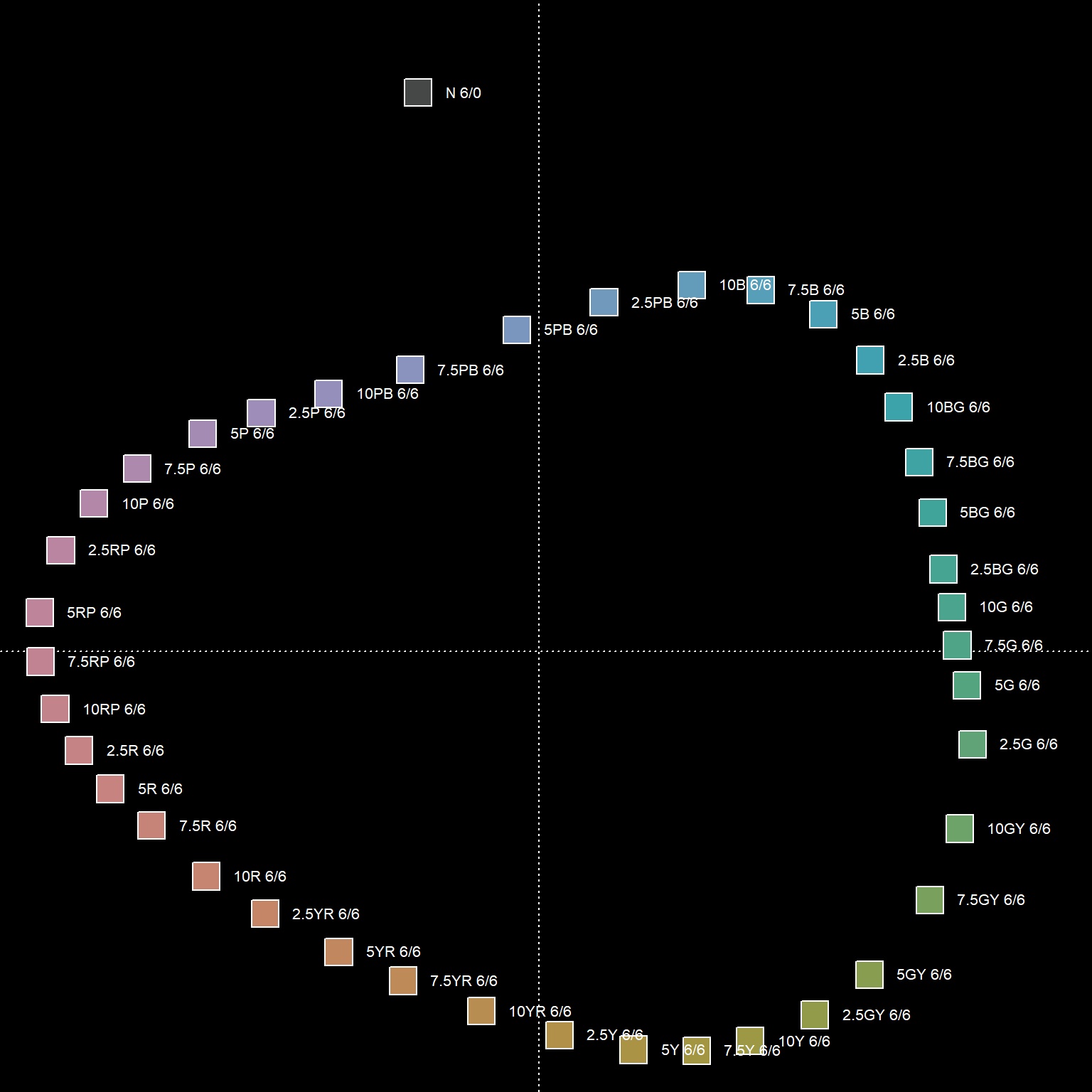

Simulated redoximorphic feature colors, contrast classes and CIE \(\Delta{E_{00}}\). Details here.

3.2.2 Standardization of Characteristics

Euclidean distance doesn’t make much sense if the characteristics do not share a common unit of measure or range of values. Nor is it relevant when some characteristics are categorical and some are continuous. For example, distances are distorted if you compare clay (%) and exchangeable Ca (cmol/kg).

In this example, exchangeable Ca contributes less to the distance between individuals than clay content, effectively down-weighting the importance of the exchangeable Ca. Typically, characteristics are given equal weight (Sneath and Sokal 1973); however, weighting is much simpler to apply after standardization.

Standardization of the data matrix solves the problem of unequal ranges or units of measure, typically by subtraction of the mean and division by standard deviation (z-score transformation).

\[x_{std} = \frac{x - mean(x)}{sd(x)}\]

There are several other standardization methods covered later. The new data matrix looks like the following:

| name | clay | sand | Mg | Ca | CEC_7 |

|---|---|---|---|---|---|

| A | -0.86 | 0.88 | -0.35 | 1.23 | -0.47 |

| ABt | -0.45 | 0.40 | -0.55 | 0.36 | -0.63 |

| Bt1 | -0.12 | 0.15 | -0.60 | -0.59 | -0.40 |

| Bt2 | 1.43 | -1.43 | 1.49 | -1.00 | 1.49 |

Using the standardized data matrix, distances computed in the property space of clay and exchangeable calcium are unbiased by the unique central tendency or spread of each character.

Rarely can the question of “dissimilarity” be answered with only two characteristics (dimensions). Euclidean distance, however, can be extended to an arbitrary number of \(n\) dimensions.

\[D(p,q) = \sqrt{ \sum_{i=1}^{n}{(p_{i} - q_{i})^{2}} }\]

In the equation above, \(i\) is one of \(n\) total characteristics. Imagining what distance “looks like” is difficult if there are more than three dimensions. Instead, examine the distance matrix calculated using all five characteristics.

You can now begin to describe dissimilarity between individuals using an arbitrary number of (relevant) characteristics. You can make statements like “The A horizon is roughly 2x more similar to the ABt horizon than it is to the Bt horizon.” Although this is a trivial example, the utility of generalizing these methods to soil survey operations should be obvious.

3.2.2.1 Review and Discuss

- What are the “data matrix” and “distance matrix”?

- What is standardization, and why is it important?

- What is the largest impediment to creating a distance matrix from NASIS and KSSL data?

- Key point: Each characteristic is its own dimension in property-space.

- Sand, clay, and CEC = 3 dimensions.

- Sand, clay, CEC, OC, and horizon depth = 5 dimensions.

- Multiple dimensions are simple to define in code but are hard to visualize.

- The curse of dimensionality.

3.2.3 Missing Data

Missing data are a fact of life. Soil scientists are quite familiar with missing lab data (“Why didn’t we request optical grain counts?”) or missing essential NASIS pedon data elements, such as horizon bottom depth, estimated clay fraction, or pH. Nearly all of the methods described in this document are very sensitive to missing data. In other words, they won’t work! Following are a couple of possible solutions:

- Fix the missing data if at all possible,

- Estimate the missing data values from know relationships to other properties or a group-wise mean or median, or

- Remove records containing any missing data.

3.2.4 Visualizing Pair-Wise Distances: The Dendrogram

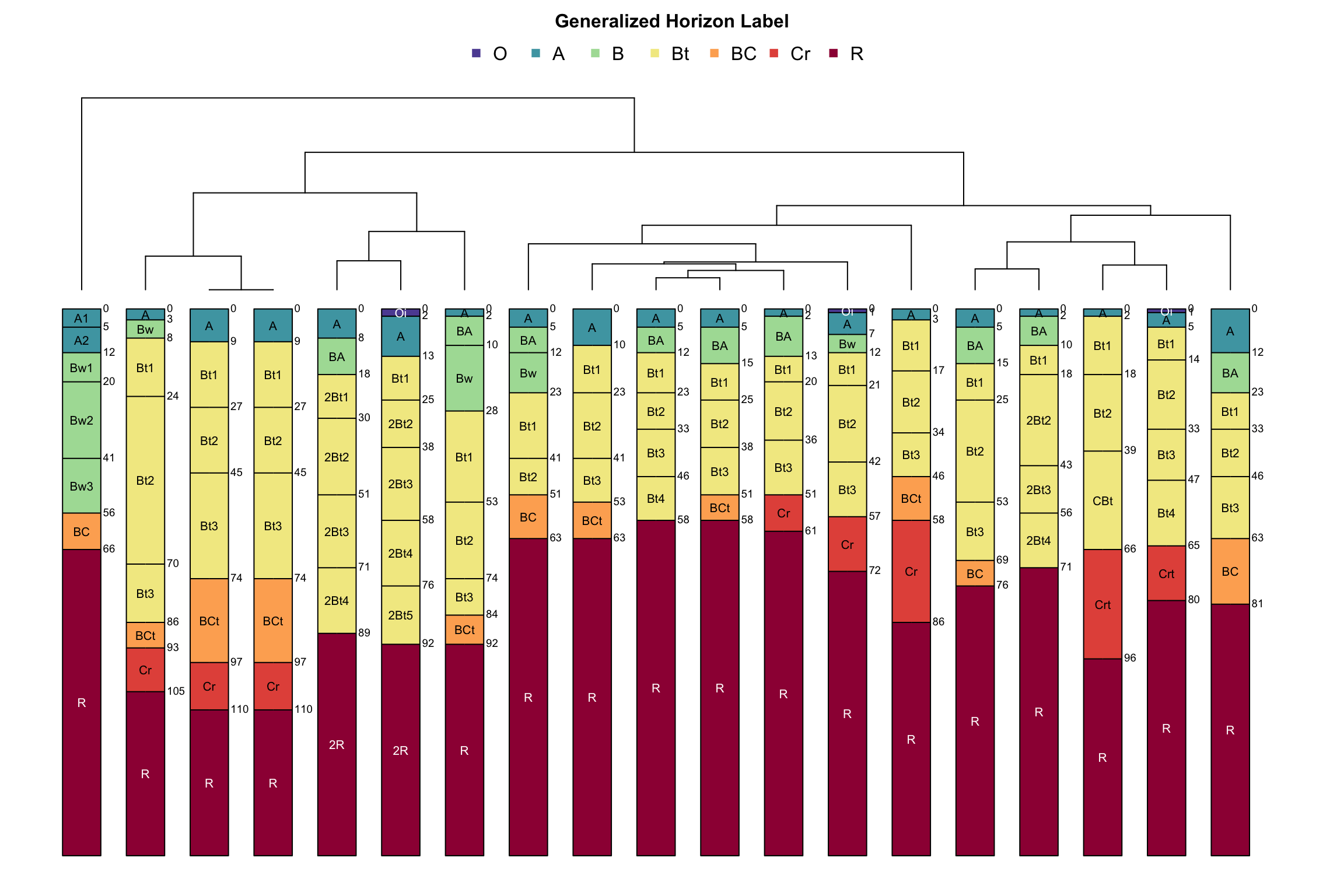

Dendrograms are a convenient approximation of pair-wise distances between individuals (after application of hierarchical grouping criteria; more on this later). Dissimilarity between branches is proportional to the level at which branches merge: branching at higher levels (relative to the root of the tree) suggests greater dissimilarity; branching at lower levels suggests greater similarity. Consider the previous example in which distance between individuals was defined in terms of sand and clay percentages.

Interpretation is simple. Distance in property-space is directly proportional to branching height in the corresponding dendrogram. Visualizing the geometry of pair-wise distances in more than three dimensions is difficult. A dendrogram, however, can conveniently summarize a distance matrix created from an arbitrary number of characteristics (dimensions). It is important to note that some information about pair-wise distances is lost or distorted in the dendrogram. Distortion is least near the terminal “leaves” of the dendrogram. This phenomena is analogous to the distortion generated by a map projection. It is impossible to flatten a higher-dimensional entity to a lower-dimensional form without causing distortion.

3.2.4.1 Re-arranging a Dendrogram for Clarity

The branches of a dendrogram can be rotated like a mobile, so that the final ordering of the terminal “leaves” approximates an alternate ranking. For example, branches of the following dendrogram (right-hand side) have been rotated to approximate the expected hydrologic gradient from summit to toeslope.

This figure is based on component records from SSURGO, arranged using functions from the sharpshootR package, and presented in SoilWeb/SDE.

3.2.5 Cluster Analysis: Finding Groups in Data

Cluster analysis is a massive topic that deals with the seemingly simple task of finding useful groups within a dataset. This topic and the methods used are also referred to as “unsupervised classification” in the fields of remote sensing and GIS. All of the available algorithms will find groups in a given dataset; however, it is up to the subject expert to determine the following:

- Suitable characteristics and standardization method,

- Appropriate clustering algorithm,

- Criteria to determine the “right” number of clusters,

- Limitations of the selected algorithm,

- Interpretation of the final grouping based on subject knowledge, and

- The possibility of needing to start over at step 1.

3.2.5.1 Using Color to Communicate Results of Clustering or Ordination

Note that the widespread use of color in the following examples is not for aesthetic purposes. Colors are convenient for tri-variate data-spaces because you can visually integrate the information into a self-consistent set of classes.

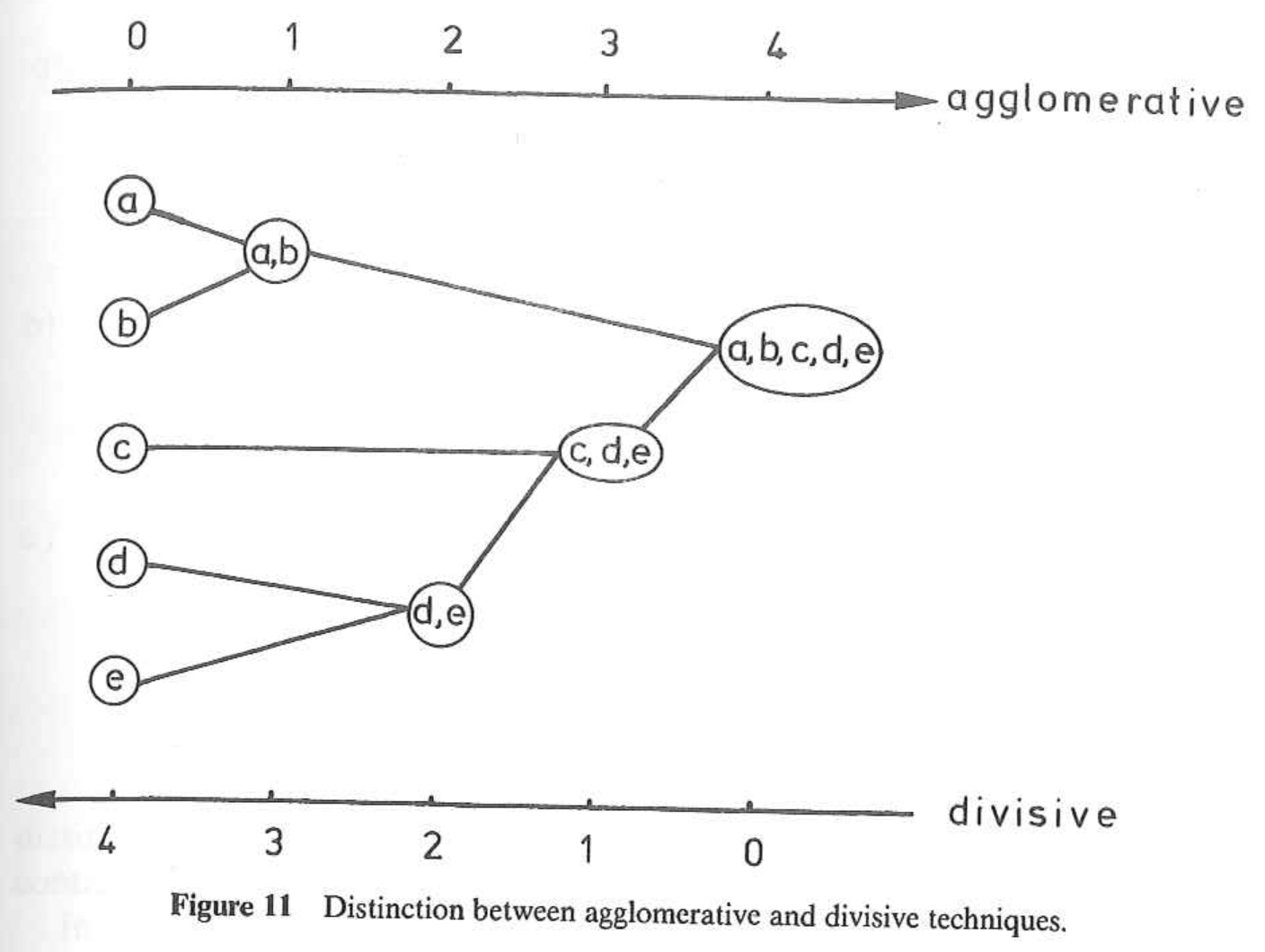

3.2.5.2 Hierarchical Clustering

Hierarchical clustering is useful when a full distance matrix is available and the optimal number of clusters is not yet known. This form of clustering creates a data structure that can encode “grouping” information from one cluster to as many clusters as there are individuals. The expert must determine the optimal place to “cut the tree” and generate a fixed set of clusters. The results from a hierarchical clustering operation are nearly always presented as a dendrogram.

3.2.5.2.1 Methods

There are two main types of hierarchical clustering.

- Agglomerative: Start with individuals and iteratively combine into larger and larger groups.

- Divisive: Start with all individuals and iteratively split into smaller and smaller groups.

Both methods are strongly influenced by the choice of standardization method and distance metric. Both methods require a full, pair-wise distance matrix as input. This requirement can limit the use of hierarchical clustering to datasets that can be fit into memory.

The agglomerative methods also depend on the choice of linkage criterion. Some of these criteria include:

- Average: usually generates spherical clusters, default in

agnes()and recommended by (Kaufman and Rousseeuw 2005), - Single linkage: usually generates elongated clusters,

- Complete linkage: usually generates small, compact clusters,

- Ward’s (minimum variance) method,

- Weighted average (or Gower’s) linkage: spherical clusters of roughly equal size, and

- “Flexible strategy”: adjustable linkage based on parameter \(\alpha\).

- “Flexible UPGMA” (Belbin, Faith, and Milligan 1992): adjustable linkage based on parameter \(\beta\).

See (Kaufman and Rousseeuw 2005), (Arkley 1976), (Legendre and Legendre 2012), and agnes() manual page for a detailed description of these linkage criteria. Selection of linkage criteria can be quantitatively evaluated using the cophenetic correlation; and index of how well a dendrogram preserves the original pair-wise distances. This thread on StackExchange has a nice summary of various linkage criteria. I really like these slides by Brian Tibshirani on the topic of agglomerative method selection. More on this later.

3.2.5.2.2 Review and Discuss

- The simplicity and lack of decisions make the divisive method convenient for most work.

- The top-down approach is similar to the way in which we describe soil morphology and taxonomy.

- Linkage criteria should be selected based on the hypothesized shape of clusters or prior subject knowledge.

- When selecting a method, read/think about it; don’t just go “fishing.”

- Personal opinion: I usually select divisive hierarchical clustering over the other methods: structures seem to be generally more interpretable.

3.2.5.3 Centroid and Medoid (Partitioning) Clustering

Centroid and medoid cluster analyses are commonly referred to as k-means-style analysis. “K-means,” however, is just one of many possible clustering algorithms that partition property-space into a fixed number of groups. These type of algorithms can be applied to very large datasets because they do not rely on the distance matrix. Instead, they are based on an iterative shuffling of group “centroids” until some criterion is minimized, for example, the mean variance within groups.

3.2.5.3.1 Methods

This section describes three (out of many) of the most important partitioning-type algorithms.

- K-means: Groups of individuals are partitioned around newly created “centroids”. Resulting clusters approximately spherical and contain an approximately equal number of individuals.

- K-medoids: Groups of individuals are partitioned around selected “medoids”.

- Fuzzy clustering: Individuals are assigned a “fuzzy membership” value for each partition of property-space.

All of these methods are sensitive to the type of standardization applied to the characteristics. These methods rely on iterative minimization of one or more criteria; therefore, each clustering “run” may generate slightly different output. Most implementations re-run the algorithm until it stabilizes.

3.2.5.3.2 Review and Discuss

- What is the difference between a “medoid” and a “centroid”? Can you think of a way in which both concepts could be applied to the grouping of soils data?

- medoids are tied to individuals, centroids are hypothetical (calculated)

- Fuzzy clustering is also referred to as “soft clustering,” while the other two methods are referred to as “hard clustering.” Sometimes, using both can be helpful, especially if individuals straddle the line between groups.

- Each method has its own strength and weakness, for example here is a nice summary of the limitations of k-means.

3.2.6 Ordination: Visualization in a Reduced Space

Humans are generally quite good at extracting spatial patterns, almost instantly, from two dimensional fields: faces, written language, etc. Sadly, this ability does not extend beyond two or three dimensions. The term ordination refers to a suite of methods that project coordinates in a high-dimensional space into suitable coordinates in a low-dimensional (reduced) space. Map projections are a simple form of ordination: coordinates from the curved surface of the Earth are projected to a two-dimensional plane. As with any projection, there are assumptions, limitations, and distortions. Carl Sagan gives a beautiful demonstration of this concept using shadows, in this excerpt from Cosmos. Here is another excellent demonstration based on handwriting recognition.

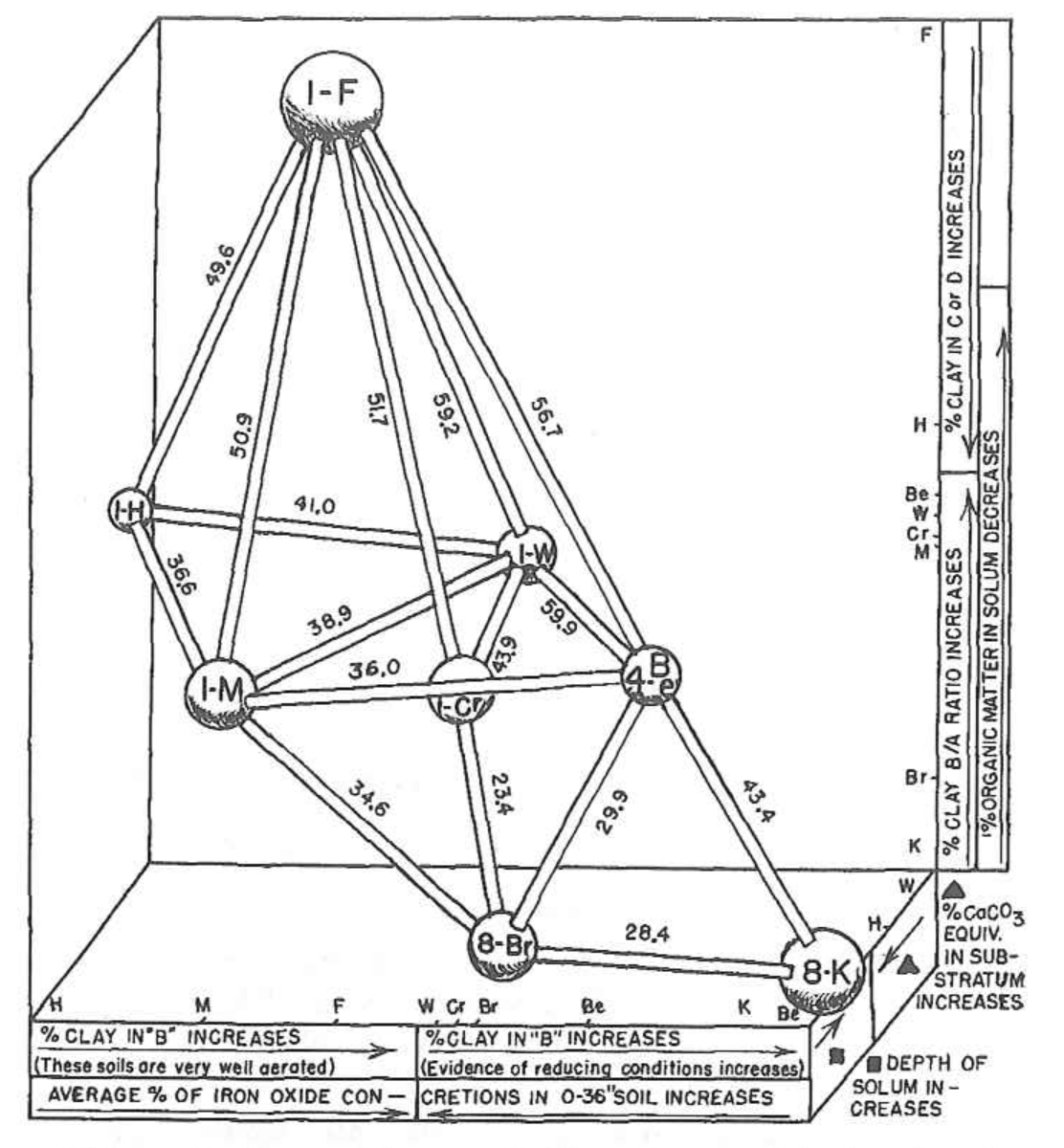

Hole and Hironaka (1960) were some of the first pedologists to apply ordination methods to soils data. The main figure from their classic paper was hand-drawn, based on a physical model (constructed from wooden dowels and spheres!) of the ordination.

Ordination of the distance matrix often reveals details that would otherwise be lost or distorted by a dendrogram representation. The following figures demonstrate how structure within a distance matrix can be lost when presented via dendrogram. Munsell hues (constant value/chroma) are compared using the CIE \(\Delta{E_{00}}\) color contrast metric. The relationship between hues (and neutral color N 6/0) is evident in the ordination.

3.2.6.1 Major Types of Ordination

Of the many possible ordination methods, there are two major types to keep in mind:

Constrained ordination: coordinates in the reduced space are subject to some kind of constraint (more rigid, simple interpretation). Principal component analysis (PCA) is one of the simplest and most widely used ordination methods. The reduced space (“principal components”) are defined by linear combinations of characteristics.

Unconstrained ordination: coordinates in the reduced space are not subject to any kind of constraint (more flexible, less interpretable). “Non-metric” multidimensional scaling (nMDS) attempts to generate a reduced space that minimizes distortion in proportional similarity; i.e., similar individuals are near each other in the reduced space, dissimilar individuals are farther apart. The “non-metric” adjective implies that exact distances are not preserved.

See (Legendre and Legendre 2012) for a comprehensive listing of methods, associated theory, and ecological applications.

3.2.6.2 An Example of nMDS Applied to Soil Data

The following example is based on a data matrix containing lab measurements of clay fraction, sand fraction, exchangeable Ca, exchangeable Mg, and CEC measured by NH4-Ac at pH 7.

| name | clay | sand | Mg | Ca | CEC_7 |

|---|---|---|---|---|---|

| A | -0.41 | 0.21 | 0.06 | 0.44 | -0.23 |

| ABt | 0.04 | -0.07 | -0.06 | -0.13 | -0.38 |

| Bt1 | 0.41 | -0.21 | -0.09 | -0.74 | -0.16 |

| … | … | … | … | … | … |

Note that distances between individuals, within clusters, and between clusters is more apparent in the nMDS representation of the distance matrix. Similar information is embedded in the dendrogram but it is not as intuitive.

3.2.6.2.1 Interpreting the Results

We can conveniently annotate the results of an ordination with contextual information, such as the approximate trends in clay content or CEC. Note that ordination methods represent a flattening of multi-dimensional data space, and in the case of nMDS, preserve proportional (vs. exact) pair-wise distances. Therefore, it is quite common for surfaces (the contours below) fit to the 2D ordination to have complex patterns.

Putting the clustering results into context is important: recall that we are working with individuals that represent genetic horizons that have been clustered according to 5 physical / chemical properties (characteristics). Differences in CEC by NH4-Ac are one of the strongest contributors to the pair-wise distances and overall clustering structure.

3.2.6.3 Review and Discuss

- Which visualization of the distance matrix was simpler to interpret: Dendrogram or ordination by nMDS?

- Do you have any questions about the figures?

- Do you have any guesses on what the clusters represent?

- Evaluating an ordination using “stress” and the Shephard Diagram

3.4 Elaboration with Examples

3.4.1 Set Up the R Session

Install R packages as needed. Open a new R script file to use as you follow along.

3.4.2 Data Sources

Most of the examples used in the following exercises come from these sources:

- Built-in data sets from the

aqpandsoilDBpackages (“sp4,” “gopheridge,” and “loafercreek”). - Results from

fetchNASIS(): pedon data from the local NASIS selected set. - Results from

fetchKSSL(): lab characterization data from the SoilWeb snapshot. - Results from

fetchOSD(): basic morphologic and taxonomic data from the SoilWeb snapshot. - Results from

SDA_query(): live SSURGO spatial and tabular data from Soil Data Access - Data from SSR 2, as CSV, downloaded from class GitHub site

In most cases, you can edit the examples and swap-in just about any data that are in a SoilProfileCollection object. For example, pedons from your local NASIS selected set can be loaded with fetchNASIS().

3.4.3 Try it!

Tinker with some SoilProfileCollection objects.

- Get some data using one of the methods listed above. If you need help, see the manual pages for examples (

?fetchKSSL) or the SoilProfileCollection tutorial. - Determine the number of profiles and horizons within the collection.

- View and extract some site and horizon attributes.

- Generate some soil profile sketches.

3.4.4 Evaluating Missing Data

The aqp package provides two functions for checking the fraction of missing data within a SoilProfileCollection object. The first function (evalMissingData) generates an index that ranges from 0 (all missing) to 1 (all present) for each profile. This index can be used to subset or rank profiles for further investigation. The second function (missingDataGrid) creates a visualization of the fraction of data missing within each horizon. Both functions can optionally filter-out horizons that don’t typically have data, for example Cr, Cd, and R horizons.

The new Missing Data vignette covers recent changes to the evalMissingData() function in aqp.

The following examples are based on the gopheridge sample dataset.

evalMissingData

# example data

data("gopheridge", package = "soilDB")

# compute data completeness

gopheridge$data.complete <- evalMissingData(

gopheridge,

vars = c('clay', 'sand', 'phfield'),

name = 'hzname',

p = 'Cr|R|Cd'

)

# check range in missing data

summary(gopheridge$data.complete)## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 0.0000 0.0000 0.2143 0.4672 1.0000 1.0000# rank

new.order <- order(gopheridge$data.complete)

# plot along data completeness ranking

par(mar = c(3, 0, 1, 1))

plotSPC(gopheridge, plot.order = new.order, print.id = FALSE, name = '', max.depth = 100, width = 0.33)

# add axis, note re-ordering of axis labels

axis(side=1, at=1:length(gopheridge), labels = round(gopheridge$data.complete[new.order], 2),

line = 0, cex.axis=0.65, las=2)

title('Gopheridge pedons sorted according to data completeness (clay, sand, pH)')missingDataGrid

# view missing data as a fraction

res <- missingDataGrid(gopheridge, max_depth=100, vars=c('clay', 'sand', 'phfield'), filter.column='hzname', filter.regex = 'Cr|R|Cd', main='Fraction of missing data (clay, sand, pH)', cols = hcl.colors(10))

# plot figure

print(res$fig)## peiid clay sand phfield

## 1 1137354 0 100 100

## 2 1147151 0 0 100

## 3 1147190 0 0 100

## 4 242808 29 29 0

## 5 252851 29 29 29

## 6 268791 0 0 0For now, extract those profiles that have a complete set of field-described clay, sand, or pH values for later use.

# be sure to read the manual page for this function

gopheridge.complete <- subset(gopheridge, data.complete > 0.99)

# another way

idx <- which(gopheridge$data.complete > 0.99)

gopheridge.complete <- gopheridge[idx, ]

# looks good

par(mar=c(0,0,3,1))

plot(gopheridge.complete, color='clay', id.style='side', label='pedon_id')3.4.5 More on the Distance Matrix and How to Make One

The following three functions are essential to the creation of a distance matrix:

dist(): This function is in base R, is simple and fast, and has a limited number of distance metrics.daisy(): This function is in cluster package, has a better selection of distance metrics, and has simple standardization. Much more convenient thandist().vegdist(): This function is in vegan package, has many distance metrics, and is primarily designed for species composition data.

The following is a short demonstration:

# get some example data from the aqp package

data('sp4', package = 'aqp')

# subset select rows and columns

sp4 <- sp4[1:4, c('name', 'clay', 'sand', 'Mg', 'Ca', 'CEC_7')]

row.names(sp4) <- sp4$name

# compare distance functions

# Euclidean distance, no standardization

round(dist(sp4[, -1], method = 'euclidean'))## A ABt Bt1

## ABt 8

## Bt1 15 7

## Bt2 48 44 39# Euclidean distance, standardization

round(daisy(sp4[, -1], stand = TRUE, metric = 'euclidean'), 2)## Dissimilarities :

## A ABt Bt1

## ABt 1.45

## Bt1 2.73 1.36

## Bt2 6.45 5.65 4.91

##

## Metric : euclidean

## Number of objects : 4# Gower's generalized distance metric (includes standardization)

round(vegdist(sp4[, -1], method = 'gower'), 2)## A ABt Bt1

## ABt 0.19

## Bt1 0.32 0.16

## Bt2 0.96 0.84 0.693.4.5.1 Distance Calculations with Categorical Data

The following example is excerpted from “A Novel Display of Categorical Pedon Data”. This example illustrates an application of clustering binary data (presence or absence of a diagnostic feature). Internally, the diagnosticPropertyPlot() function uses the daisy() function to compute pair-wise distances using the “general dissimilarity coefficient” of Gower (Gower 1971). A concise summary of this distance metric is in (Kaufman and Rousseeuw 2005).

# load some example NASIS data

data(loafercreek, package='soilDB')

hzdesgnname(loafercreek) <- 'hzname'

# cut-down to a subset, first 20 pedons

loafercreek <- loafercreek[1:20, ]

# get depth class

sdc <- getSoilDepthClass(loafercreek)

site(loafercreek) <- sdc

# diagnostic properties to consider, no need to convert to factors

v <- c('lithic.contact', 'paralithic.contact', 'argillic.horizon',

'cambic.horizon', 'ochric.epipedon', 'mollic.epipedon', 'very.shallow',

'shallow', 'mod.deep', 'deep', 'very.deep')

# do the analysis and save the results to object 'x'

x <- diagnosticPropertyPlot(loafercreek, v, k=5, grid.label='bedrckkind', dend.label = 'taxonname')If you are wondering what is in the object x, the str() function or manual page (?diagnosticPropertyPlot) can help.

3.4.6 Thematic Distance Matrix Plots

Sometimes it is useful visualize the entire distance matrix as a thematic map. The coorplot() function from the corrplot package is useful here.

# re-make data, this time with all profiles

data('sp4', package = 'aqp')

sp4 <- sp4[, c('name', 'clay', 'sand', 'Mg', 'Ca', 'CEC_7')]

sp4.scaled <- data.frame(name=sp4[, 1], round(scale( sp4[, -1]), 2))

# distance matrix

d <- dist(sp4.scaled[, -1])

# full-matrix representation

m <- as.matrix(d)

# note: setting is.corr = FALSE

corrplot(

m,

col = hcl.colors(25, palette = 'mako'),

is.corr = FALSE,

col.lim = range(m),

method = "color",

order = "original",

type = "upper",

diag = FALSE,

tl.cex = 0.66,

tl.col = 'black',

mar = c(0.1, 0, 0, 0.8),

addgrid = TRUE

) 3.4.7 Hierachrical Clustering

The go-to functions for hierarchical clustering are as follows:

hclust(): This function is agglomerative, is in base R, requires a distance matrix, and implements most of the commonly used linkage criteria.agnes(): This function is agglomerative, is inclusterpackage, can perform standardization and distance calculations, and implements more linkage criteria.diana(): This function is divisive, is inclusterpackage, can perform standardization and distance calculations.

3.4.7.1 Basic Agglomerative Hierarchical Clustering with hclust

The hclust() function and resulting hclust-class objects are simple to use, but limited.

# re-make data, this time with all profiles

data('sp4', package = 'aqp')

sp4 <- sp4[, c('name', 'clay', 'sand', 'Mg', 'Ca', 'CEC_7')]

# distance matrix

d <- daisy(sp4[, -1], metric = 'euclidean', stand = TRUE)

# hierachical clustering with base function hclust

sp4.h <- hclust(d, method = 'ward.D')

sp4.h$labels <- sp4$name

# plot with basic plotting method... not many options here

par(mar=c(2,4,2,2))

plot(sp4.h, font=2, cex=0.85)

# ID clusters after cutting tree

rect.hclust(sp4.h, 4)3.4.7.2 Better Plots via ape Package

This example uses a different approach to plotting based on functions and classes from the ape package.

# re-make data, this time with all profiles

data('sp4', package = 'aqp')

sp4 <- sp4[, c('name', 'clay', 'sand', 'Mg', 'Ca', 'CEC_7')]

# distance matrix

d <- daisy(sp4[, -1], metric = 'euclidean', stand = TRUE)

# divising clustering

dd <- diana(d)

# convert to ape class, via hclust class

h <- as.hclust(dd)

h$labels <- sp4$name

p <- as.phylo(h)

# define some nice colors

col.set <- brewer.pal(9, 'Set1')

# cut tree into 4 groups

groups <- cutree(h, 4)

# make color vector based on groups

cols <- col.set[groups]The plot methods for phylo class objects are quite flexible. Be sure to see the manual page ?plot.phylo.

par(mar=c(1,1,1,1), mfcol=c(2,2))

plot(p, label.offset=0.125, direction='right', font=1, cex=0.85, main='dendrogram')

tiplabels(pch=15, col=cols)

plot(p, type='radial', font=1, cex=0.85, main='radial')

tiplabels(pch=15, col=cols)

plot(p, type='fan', font=1, cex=0.85, main='fan')

tiplabels(pch=15, col=cols)

plot(p, type='unrooted', font=1, cex=0.85, main='unrooted')

tiplabels(pch=15, col=cols)3.4.7.3 Evaluation of Dendrogram Representation

Re-visiting our sample data from before, develop hierarchical clusterings using several strategies (methods / linkage criteria).

# re-make data, this time with all profiles

data('sp4', package = 'aqp')

sp4 <- sp4[, c('name', 'clay', 'sand', 'Mg', 'Ca', 'CEC_7')]

# distance matrix

d <- daisy(sp4[, -1], metric = 'euclidean', stand = TRUE)

# hierarchical clustering based on several strategies

# agglomerative

h.avg <- agnes(d, method='average')

h.single <- agnes(d, method='single')

h.complete <- agnes(d, method='complete')

h.ward <- agnes(d, method='ward')

h.flexible <- agnes(d, method='gaverage', par.method = 0.01)

# divisive

h.div <- diana(d)The correlation between original distance matrix and cophenetic distance matrix is a reasonable index of how faithfully a dendrogram preserves the original pair-wise distances.

# agglomerative hierarchical clustering with various linkage criteria

corr.avg <- cor(d, cophenetic(h.avg))

corr.single <- cor(d, cophenetic(h.single))

corr.complete <- cor(d, cophenetic(h.complete))

corr.ward <- cor(d, cophenetic(h.ward))

corr.flexible <- cor(d, cophenetic(h.flexible))

# divisive hierarchical clustering

corr.div <- cor(d, cophenetic(h.div))Combine the results into a table for quick comparison. Note that the agnes and diana functions provide an agglomerative / divisive coefficient that can be used to evaluate clustering efficiency (e.g. cluster size and “compactness”). Depending on the application, one metric may be more informative than the other.

res <- data.frame(

method=c('average', 'single', 'complete', 'ward', 'flexible UPGMA', 'divisive'),

cophenetic.correlation=c(corr.avg, corr.single, corr.complete, corr.ward, corr.flexible, corr.div),

agg_div.coefficient=c(h.avg$ac, h.single$ac, h.complete$ac, h.ward$ac, h.flexible$ac, h.div$dc)

)

# re-order

res <- res[order(res$cophenetic.correlation, decreasing = TRUE), ]| method | cophenetic.correlation | agg_div.coefficient |

|---|---|---|

| average | 0.840 | 0.787 |

| flexible UPGMA | 0.840 | 0.778 |

| single | 0.784 | 0.613 |

| complete | 0.759 | 0.879 |

| ward | 0.756 | 0.894 |

| divisive | 0.703 | 0.874 |

Investigate differences graphically: I’ve ranked according to the frequency withwhich I use the various methods.

par(mar=c(0,0.25,1.5,0.25), mfrow=c(2,3))

plot(as.phylo(as.hclust(h.div)), label.offset=0.125, direction='right', font=1, cex=0.75, main='1. Divisive', cex.main = 1.75)

tiplabels(pch=15, col=col.set[cutree(h.div, 4)], cex = 1.5)

plot(as.phylo(as.hclust(h.ward)), label.offset=0.125, direction='right', font=1, cex=0.75, main='2. Ward', cex.main = 1.75)

tiplabels(pch=15, col=col.set[cutree(h.ward, 4)], cex = 1.5)

plot(as.phylo(as.hclust(h.flexible)), label.offset=0.125, direction='right', font=1, cex=0.75, main='3. Flexible (0.01)', cex.main = 1.75)

tiplabels(pch=15, col=col.set[cutree(h.flexible, 4)], cex = 1.5)

plot(as.phylo(as.hclust(h.avg)), label.offset=0.125, direction='right', font=1, cex=0.75, main='4. Average', cex.main = 1.75)

tiplabels(pch=15, col=col.set[cutree(h.avg, 4)], cex = 1.5)

plot(as.phylo(as.hclust(h.single)), label.offset=0.125, direction='right', font=1, cex=0.75, main='(never) Single', cex.main = 1.75)

tiplabels(pch=15, col=col.set[cutree(h.single, 4)], cex = 1.5)

plot(as.phylo(as.hclust(h.complete)), label.offset=0.125, direction='right', font=1, cex=0.75, main='(never) Complete', cex.main = 1.75)

tiplabels(pch=15, col=col.set[cutree(h.complete, 4)], cex = 1.5)3.4.7.3.1 More on the Flexible UPGMA method

Test the “flexible UPGMA” method (Belbin, Faith, and Milligan 1992) by iterating over possible values for \(\beta\). Looks like a value near 0.01 would be ideal (e.g. highest cophenetic correlation). Interestingly, this is very close to the cophenetic correlation associated with the “average” linkage criteria.

# init a sequence spanning -1 to 1

beta <- seq(from=-1, to=1, length.out = 100)

# init an empty vector to store results

r <- vector(mode='numeric', length = length(beta))

# iterate over values of beta and compute cophenetic correlation

for(i in 1:length(r)) {

r[i] <- cor(d, cophenetic(agnes(d, method='gaverage', par.method = beta[i])))

}

# plot

par(mar=c(5,5,1,1))

plot(beta, r, type='l', xlab='beta parameter', ylab='cophenetic correlation', pch=16, las=1)

# locate the max coph. corr.

idx <- which.max(r)

# mark this point and label

points(beta[idx], r[idx], col='red', cex=2, lwd=2)

text(beta[idx], r[idx], labels = round(beta[idx], 3), pos=4)3.4.8 Centroid and Medoid (Partitioning) Clustering

The following creates simulated data for demonstration purposes, representing two populations:

- mean = 0, sd = 0.3

- mean = 1, sd = 0.3

# nice colors for later

col.set <- brewer.pal(9, 'Set1')

# 2D example

x <- rbind(

matrix(rnorm(100, mean = 0, sd = 0.3), ncol = 2),

matrix(rnorm(100, mean = 1, sd = 0.3), ncol = 2)

)

colnames(x) <- c("x", "y")3.4.8.1 Hard Classes

3.4.8.1.1 K-Means

It is important to note that the k-means algorithm is sensitive to the initial selection of centroid locations (typically random). The default behavior of the kmeans() function does not attempt to correct for this limitation. Note that cluster assignment and centroid vary across runs (panels in the figure below).

par(mfrow=c(3,3), mar=c(1,1,1,1))

for(i in 1:9) {

cl <- kmeans(x, centers=3)

plot(x, col = col.set[cl$cluster], axes=FALSE)

grid()

points(cl$centers, col = col.set, pch = 8, cex = 2, lwd=2)

box()

}Setting the nstart argument (“number of random starts”) to a value great than 1 (10 is ideal) will ensure that the final clustering configuration will remain stable between runs. Note that the cluster ID (color) will vary between runs, however, with nstart=10 the overall configuration remains the same.

par(mfrow=c(3,3), mar=c(1,1,1,1))

for(i in 1:9) {

cl <- kmeans(x, centers=3, nstart = 10, iter.max = 100)

plot(x, col = col.set[cl$cluster], axes=FALSE)

grid()

points(cl$centers, col = col.set, pch = 8, cex = 2, lwd=2)

box()

}3.4.8.1.2 K-Medoids

The cluster package provides two interfaces to the k-medoids algorithm:

pam(): small to medium sized data setsclara(): optmized for larger data sets

A quick example of using pam() to identify an increasing number of clusters.

par(mfrow=c(2,3), mar=c(1,1,1,1))

for(i in 2:7) {

cl <- pam(x, k = i, stand = TRUE)

plot(x, col = col.set[cl$clustering], axes=FALSE)

grid()

points(cl$medoids, col = col.set, pch = 0, cex = 2, lwd=2)

box()

}3.4.8.2 Fuzzy Clusters

Clustering results are in the form of class membership; values ranging between 0 and 1. This means that group membership is a continuum vs. the “hard” classes assigned by k-means or k-medoids. The “mixture” of class membership in the example below is conveniently expressed using proportions of red, green, and blue (sRGB color coordinates).

# re-make data, this time with all profiles

data('sp4', package = 'aqp')

sp4.std <- data.frame(sp4[, c('id', 'name', 'top', 'bottom')], scale( sp4[, c('Mg', 'Ca')]))

# perform fuzzy clustering

cl <- fanny(sp4.std[, c('Mg', 'Ca')], k = 3, stand = FALSE)

# get membership matrix

m <- cl$membership

# convert to colors by interpreting membership as sRGB proportions

cols <- rgb(m)

# use Shannon H to set symbol size

H <- apply(m, 1, shannonEntropy)

# larger H -> smaller symbols

pt.cex <- (1/H) * 2.5

# setup plot

par(mar=c(4, 4, 3, 0.5))

plot(sp4.std$Mg, sp4.std$Ca, asp=1, ylab='Exchangeable Mg (cmol/kg), Standardized', xlab='Exchangeable Ca (cmol/kg), Standardized', type='n')

abline(h=0, v=0, col='black')

grid()

# add original obs

points(sp4.std$Mg, sp4.std$Ca, bg=cols, col='black', cex = pt.cex, pch = 21)

# annotate with Dunn's fuzzy coeff

mtext(sprintf("Dunn's partition coefficient: %s", round(cl$coeff[1], 2)), line = 1, cex = 1.5)Save the RGB color representation of cluster membership to the source data.frame and convert to SoilProfileCollection.

sp4.std$colors <- cols

depths(sp4.std) <- id ~ top + bottom

par(mar=c(0, 0, 0, 0))

plot(sp4.std, color='colors', cex.names=0.75)

title('Fuzzy Clustering Results in Context', line=-1)3.4.8.3 How Many Clusters?

There is no simple answer to the question “How many clusters are in my data?” Some metrics, however, can be used to help estimate a “reasonable” number of clusters. The mean silhouette width is a useful index of “cluster compactness” relative to neighbor clusters (Rousseeuw 1987). Larger silhouette widths suggest tighter grouping.

# re-make data, this time with all profiles

data('sp4', package = 'aqp')

sp4.std <- data.frame(sp4[, c('id', 'name', 'top', 'bottom')], scale( sp4[, c('Mg', 'Ca')]))

# perform hard clustering

sil.widths <- vector(mode='numeric')

for(i in 2:10) {

cl <- pam(sp4.std[, c('Mg', 'Ca')], k = i, stand = FALSE)

sil.widths[i] <- cl$silinfo$avg.width

}

par(mar=c(4,4,3,1))

plot(sil.widths, type='b', xlab='Number of Clusters', ylab='Average Silhouette Width', las=1, lwd=2, col='RoyalBlue', cex=1.25, main='Finding the "Right" Number of Clusters')

grid()

According to this metric, it looks like 3 clusters is reasonable. Again, this is a judgement call–most decisions related to clustering algorithm selection and the “optimal number of clusters” are somewhat subjective.

# perform fuzzy clustering

cl <- pam(sp4.std[, c('Mg', 'Ca')], k = 3, stand = FALSE)

# setup plot

par(mar=c(4,4,0,0))

plot(sp4.std$Mg, sp4.std$Ca, asp=1, ylab='Exchangeable Mg (cmol/kg), Standardized', xlab='Exchangeable Ca (cmol/kg), Standardized', type='n')

abline(h=0, v=0, col='black')

grid()

# add original obs

points(sp4.std$Mg, sp4.std$Ca, bg=cl$clustering, col='black', cex=1.25, pch=21)3.4.9 Ordination

3.4.9.1 Principal Component Analysis

A simple, constrained ordination based on variance. This method does not use the distance matrix, rather it seeks to find a new set of axes that describe maximum variance via linear combinations of characteristics. Standardization is essential.

# re-make data, this time with all profiles

data('sp4', package = 'aqp')

sp4 <- sp4[, c('name', 'clay', 'sand', 'Mg', 'Ca', 'CEC_7')]

sp4.scaled <- data.frame(name=sp4[, 1], round(scale( sp4[, -1]), 2))

# PCA

# note that we are leaving out the first column: the horizon names

# note the syntax used to extract the principal components

# note that PCA doesn't use the distance matrix

pca <- predict(princomp(sp4.scaled[, -1]))

## perform clustering to highlight structure in the PCA

# distance matrix

d <- dist(sp4.scaled[, -1])

m <- as.matrix(d)

dimnames(m) <- list(sp4.scaled$name, sp4.scaled$name)

d <- as.dist(m)

# dendrogram from divisive clustering

dd <- diana(d)

h <- as.hclust(dd)

p <- as.phylo(h)

# define colors based on cutting a divisive hierarchical clustering into 4 groups

cols <- brewer.pal(9, 'Set1')[cutree(h, 4)]

# plot first 2 PC

plot(pca[, 1:2], asp=1, type='n', axes=FALSE, xlab='', ylab='', main="Principal Components 1 and 2")

grid()

text(pca[, 1:2], sp4.scaled$name, cex=0.75, col=cols, font=2)

box()3.4.9.3 Sammon’s Non-linear Mapping

Simple interface to nMDS, input is a distance matrix. Note that this algorithm will fail if there are 0’s or ties within the distance matrix. See ?sammon for details.

# re-make data, this time with all profiles

data('sp4', package = 'aqp')

sp4 <- sp4[, c('name', 'clay', 'sand', 'Mg', 'Ca', 'CEC_7')]

sp4.scaled <- data.frame(name=sp4[, 1], round(scale( sp4[, -1]), 2))

# distance matrix

d <- dist(sp4.scaled[, -1])

m <- as.matrix(d)

dimnames(m) <- list(sp4.scaled$name, sp4.scaled$name)

d <- as.dist(m)

# dendrogram from divisive clustering

dd <- diana(d)

h <- as.hclust(dd)

p <- as.phylo(h)

# define colors based on cutting a divisive hierarchical clustering into 4 groups

cols <- brewer.pal(9, 'Set1')[cutree(h, 4)]

# nMDS from distance matrix

s <- sammon(d)

# plot

par(mar=c(3,1,3,1))

plot(s$points, asp=1, type='n', axes=FALSE, xlab='', ylab='', main="nMDS by Sammon's Non-Linear Mapping")

# abline(v=0, h=0, col='black')

grid()

text(s$points, rownames(s$points), cex=0.75, col=cols, font=2)

box()3.4.9.4 nMDS with the vegan Package

The following example is quite brief. See the Introduction to ordination in vegan vignette for some excellent worked examples and ecological interpretation. The vegan package FAQ is another excellent resource. Numerical Ecology with R can be used as both reference and learning resource.

The metaMDS() function from the vegan package provides a convenience function that automates most of the steps required to create an oridination.

# re-make data, this time with all profiles

data('sp4', package = 'aqp')

sp4 <- sp4[, c('name', 'clay', 'sand', 'Mg', 'Ca', 'CEC_7')]

sp4.scaled <- data.frame(name=sp4[, 1], round(scale( sp4[, -1]), 2))

# define colors based on natural groupings

cols <- brewer.pal(9, 'Set1')

# distance calc + ordination

s <- metaMDS(sp4.scaled[, -1], distance = 'gower', autotransform = FALSE, wascores=FALSE)

## this is used to generate 4 classes from a divisive hierarchical clustering

# manually compute distance matrix

d <- dist(sp4.scaled[, -1])

m <- as.matrix(d)

dimnames(m) <- list(sp4.scaled$name, sp4.scaled$name)

d <- as.dist(m)

# dendrogram from divisive clustering

dd <- diana(d)

h <- as.hclust(dd)

# define colors based on cutting a divisive hierarchical clustering into 4 groups

cols <- brewer.pal(9, 'Set1')[cutree(h, 4)]

# plot ordination

par(mar=c(3,1,3,1))

fig <- ordiplot(s, type='none', cex.axis=0.75, axes=FALSE, xlab='', ylab='', main='nMDS by metaMDS()')

abline(h=0, v=0, lty=2, col='grey')

text(fig$sites, sp4$name, cex=0.75, font=2, col=cols)

box()It is also possible to annotate an ordination using information from a hierarchical clustering via vegan::ordicluster.

par(mfcol = c(1, 2), mar=c(3,1,3,1))

fig <- ordiplot(s, type='none', cex.axis=0.75, axes=FALSE, xlab='', ylab='', main='nMDS by metaMDS()')

abline(h=0, v=0, lty=2, col='grey')

text(fig$sites, sp4$name, cex=0.75, font=2, col=cols)

# annotate with hierarchical clustering

ordicluster(fig, h, prune=3, col = "black")

box()

p <- as.phylo(h)

plot(p, cex = 0.66, label.offset = 0.5)

tiplabels(, pch = 15, col = cols)Goodness of nMDS fit at each point (scaled and sqrt-transformed). Smaller symbols suggest better fit.

pt.cex <- sqrt(goodness(s) * 50)

par(mar=c(3,1,3,1))

fig <- ordiplot(s, type='none', cex.axis=0.75, axes=FALSE, xlab='', ylab='', main='nMDS by metaMDS()')

abline(h=0, v=0, lty=2, col='grey')

text(fig$sites, sp4$name, font=2, col=cols, cex = pt.cex)

# ordicluster(fig, agnes(daisy(sp4.scaled[, -1]), method='ward'), prune=3, col = "orange")

box()The Shepard diagram (stress plot) is used to assess the quality of a nMDS ordination. There should be as little scatter as possible in this diagram.

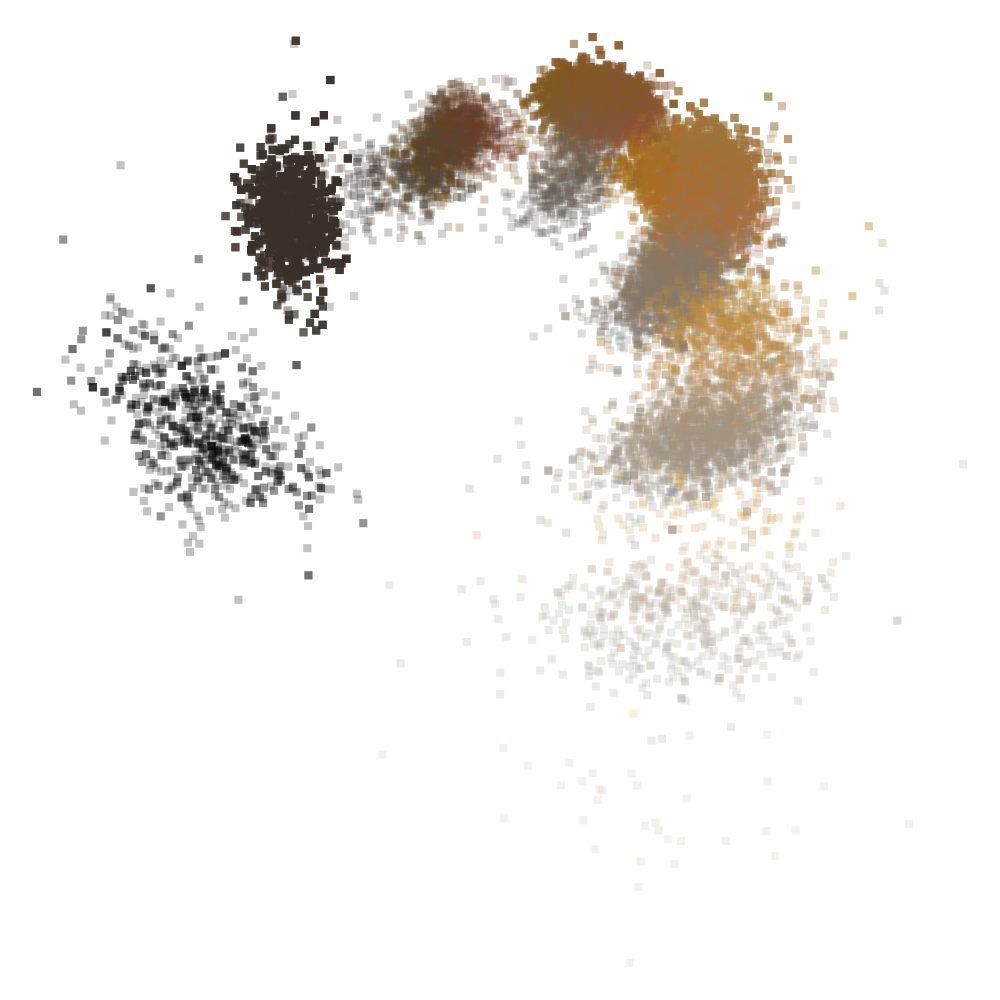

3.4.9.5 nMDS of Very Large Data

Ordination by nMDS of very large data (> 1,000 records) requires special methods.

https://cran.r-project.org/web/packages/bigmds/bigmds.pdf

nMDS representation of all RMF (moist) colors described in NASIS.

3.5 Practical Applications

Before you work through the following examples, you should review the SoilProfileCollection object tutorial.

3.5.1 Pair-Wise Distances Between Soil Profiles

# init example data

data(sp4)

depths(sp4) <- id ~ top + bottom

# eval dissimilarity:

# using Ex-Ca:Mg and CEC at pH 7

# with no depth-weighting (k=0)

# to a maximum depth of 40 cm

d <- NCSP(sp4, vars=c('ex_Ca_to_Mg', 'CEC_7'), k = 0, maxDepth = 40)

# check distance matrix:

round(d, 1)## colusa glenn kings mariposa mendocino napa san benito shasta

## glenn 13.8

## kings 16.0 13.1

## mariposa 8.4 11.6 16.5

## mendocino 12.1 8.2 17.0 15.5

## napa 31.5 24.9 30.5 30.3 22.2

## san benito 26.8 21.4 27.4 29.3 16.4 18.0

## shasta 17.2 13.6 8.7 17.6 17.6 34.8 23.3

## shasta-trinity 6.4 16.9 22.3 9.6 17.0 30.9 28.3 23.3

## tehama 30.1 23.9 29.2 28.6 20.8 9.0 15.3 32.7

## shasta-trinity

## glenn

## kings

## mariposa

## mendocino

## napa

## san benito

## shasta

## shasta-trinity

## tehama 29.2# visualize dissimilarity matrix via hierarchical clustering

par(mar=c(0,0,3,1))

plotProfileDendrogram(sp4, clust, dend.y.scale = 50, scaling.factor = 0.66, y.offset = 3.5, width=0.3, cex.names = 0.7, color='ex_Ca_to_Mg', col.label='Exchageable Ca to Mg Ratio', name.style = 'center-center')3.5.2 Pair-Wise Distances Between Soil Series

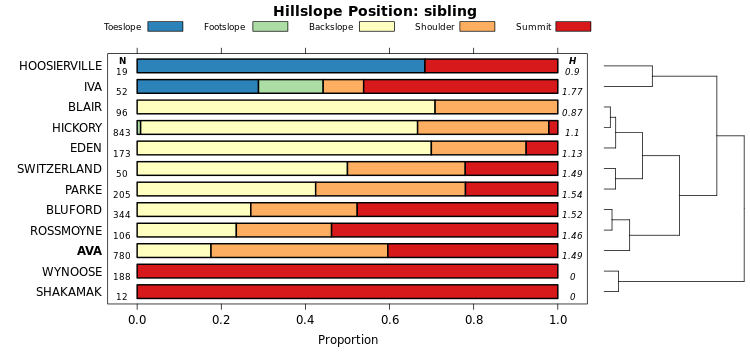

The following figures are related to some of the graphical summaries provided by SoilWeb/SDE. These related tutorials cover similar material in greater detail:

The fetchOSD function can return additional summaries tabulated from climate data, MLRA boundaries, SSURGO, and much more with the extended=TRUE argument. Lets experiment with distances computed from annual climate data and hillslope position.

# soil series from around CONUS

soils <- c('redding', 'pentz', 'willows', 'yolo', 'hanford', 'cecil', 'sycamore', 'KLAMATH', 'drummer', 'musick', 'zook')

s <- fetchOSD(soils, extended = TRUE)

# note additional data, packed into a list

str(s, 1)## List of 19

## $ SPC :Formal class 'SoilProfileCollection' [package "aqp"] with 8 slots

## $ competing :'data.frame': 57 obs. of 3 variables:

## $ geog_assoc_soils:'data.frame': 87 obs. of 2 variables:

## $ geomcomp :'data.frame': 9 obs. of 9 variables:

## $ hillpos :'data.frame': 10 obs. of 8 variables:

## $ mtnpos :'data.frame': 2 obs. of 9 variables:

## $ terrace :'data.frame': 9 obs. of 5 variables:

## $ flats :'data.frame': 7 obs. of 7 variables:

## $ shape_across :'data.frame': 11 obs. of 8 variables:

## $ shape_down :'data.frame': 11 obs. of 8 variables:

## $ pmkind :'data.frame': 19 obs. of 5 variables:

## $ pmorigin :'data.frame': 43 obs. of 5 variables:

## $ geomorphons :'data.frame': 11 obs. of 12 variables:

## $ mlra :'data.frame': 67 obs. of 4 variables:

## $ ecoclassid :'data.frame': 98 obs. of 5 variables:

## $ climate.annual :'data.frame': 88 obs. of 12 variables:

## $ climate.monthly :'data.frame': 264 obs. of 14 variables:

## $ NCCPI :'data.frame': 11 obs. of 16 variables:

## $ soilweb.metadata:'data.frame': 28 obs. of 2 variables:3.5.2.1 Annual Climate Data

The vizAnnualClimate function (sharpshootR package) arranges percentiles of annual climate summaries according to divisive hierarchical clustering applied to median values. Climate summaries were derived from 800m, daily PRISM data spanning 1981-2010.

# control color like this

trellis.par.set(plot.line=list(col='RoyalBlue'))

# control centers symbol and size here

res <- vizAnnualClimate(s$climate.annual, IQR.cex = 1.25, cex=1.1, pch=18)

# plot figure, this is Lattice graphics

print(res$fig)# do something with clustering

par(mar=c(0,0,1,0))

# usually requires tinkering...

plotProfileDendrogram(s$SPC, clust = res$clust, scaling.factor = 0.05, width = 0.3, y.offset = 1.3, name.style = 'center-center', cex.names = 0.75, hz.depths = TRUE, plot.depth.axis = FALSE, fixLabelCollisions = TRUE, hz.depths.offset = 0.05)

mtext('sorted by annual climate summaries', side = 3, at = 0.5, adj = 0, line = -1.5, font=3)3.5.2.2 Hillslope Position

The vizHillslopePosition function (sharpshootR package) arranges hillslope position proportions (SSURGO) according to divisive hierarchical clustering. Proportions are used as characteristics for each soil series. The branches of the dendrogram are rotated so that ordering within the figure approximates the hydrologic gradient as closely as possible. Rotation is performed by the dendextend::rotate function.

3.5.3 Pair-Wise Distances Between Subgroup-Level Taxa

The following are demonstrations of pair-wise distances computed from categorical data and the use of a dendrogram to organize groups from Soil Taxonomy. Click here for details.

# define a vector of series

s.list <- c('amador', 'redding', 'pentz', 'pardee', 'yolo', 'hanford', 'cecil', 'sycamore', 'KLAMATH', 'MOGLIA', 'drummer', 'musick', 'zook', 'argonaut', 'PALAU')

# get and SPC object with basic data on these series

s <- fetchOSD(s.list)

# graphical check

par(mar=c(0,0,2,1))

plotSPC(s, cex.names = 0.55, width = 0.3, name.style = 'center-center', shrink = TRUE, cex.depth.axis = 0.8)

title('Selected Pedons from Official Series Descriptions', line=0)# check structure of some site-level attributes

# head(site(s))[, c('id', 'soilorder', 'suborder', 'greatgroup', 'subgroup')])| id | soilorder | suborder | greatgroup | subgroup |

|---|---|---|---|---|

| AMADOR | inceptisols | xerepts | haploxerepts | typic haploxerepts |

| ARGONAUT | alfisols | xeralfs | haploxeralfs | mollic haploxeralfs |

| CECIL | ultisols | udults | kanhapludults | typic kanhapludults |

| DRUMMER | mollisols | aquolls | endoaquolls | typic endoaquolls |

| HANFORD | entisols | orthents | xerorthents | typic xerorthents |

| KLAMATH | mollisols | aquolls | cryaquolls | cumulic cryaquolls |

par(mar = c(0, 1, 1, 4))

# plot dendrogram + profiles

d <- SoilTaxonomyDendrogram(

s,

cex.taxon.labels = 0.75,

scaling.factor = 0.015,

width = 0.3,

cex.names = 0.55,

shrink = TRUE,

depth.axis = list(line = -3.25, cex = 0.75),

max.depth = 200

)Check the resulting distance matrix.

3.5.5 Clustering of Soil Colors

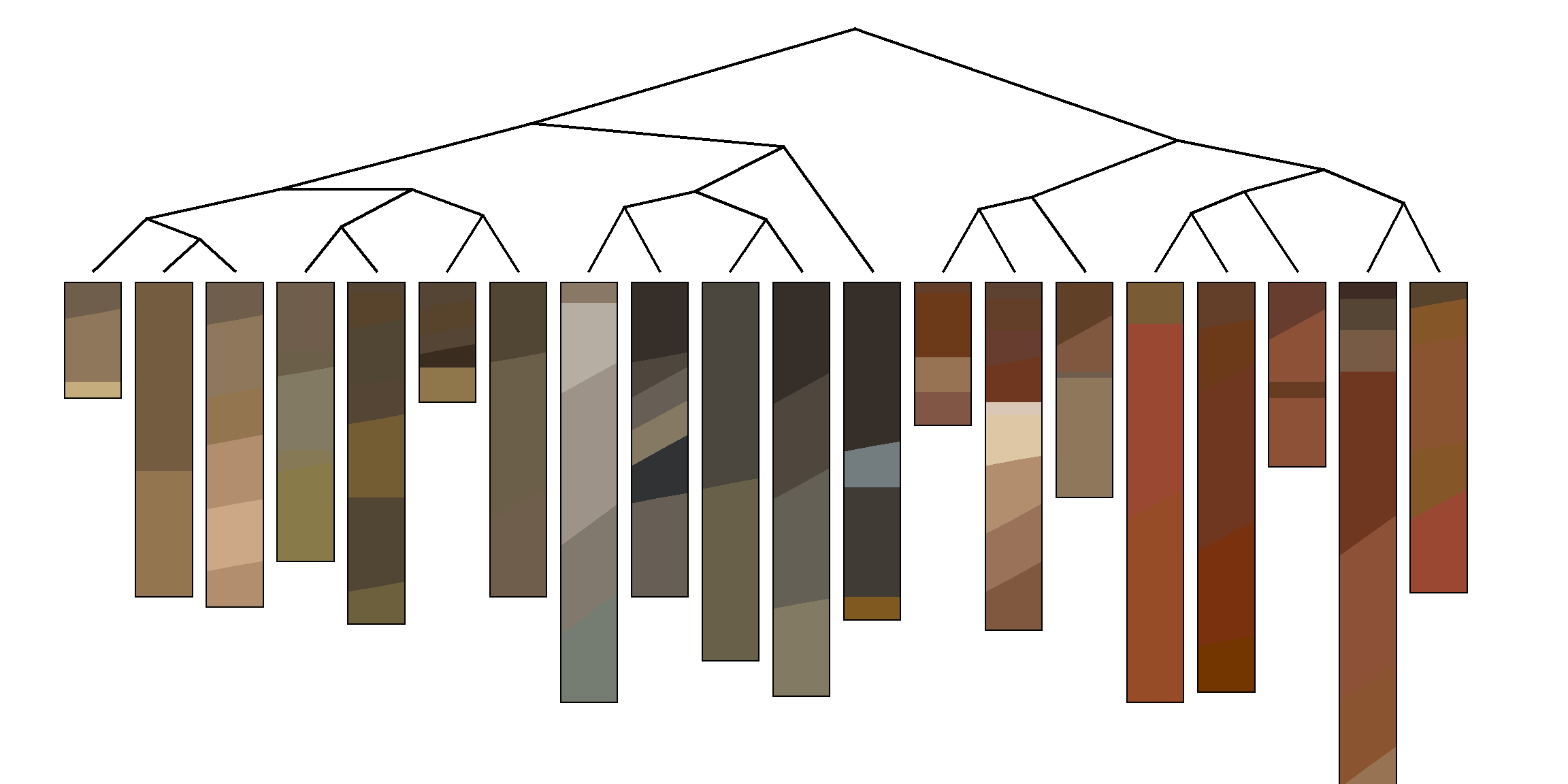

Just for fun, use hierarchical clustering and nMDS on soil color data from the OSDs that were used in the previous example.

# extract horizon data from select OSDs in above example

h <- horizons(s)

# convert Munsell color notation to sRGB

# these are moist colors

rgb.data <- munsell2rgb(h$hue, h$value, h$chroma, return_triplets = TRUE)

lab.data <- munsell2rgb(h$hue, h$value, h$chroma, returnLAB = TRUE)

# check

head(rgb.data)## r g b

## 1 0.4324796 0.3675617 0.29440648

## 2 0.5570066 0.4650572 0.35471027

## 3 0.5570066 0.4650572 0.35471027

## 4 0.7708844 0.6765034 0.48926139

## 5 0.3876272 0.2456471 0.16366245

## 6 0.4240408 0.2286641 0.09553195## L A B

## 1 40.91415 3.659798 13.21994

## 2 51.40327 4.926908 18.93657

## 3 51.40327 4.926908 18.93657

## 4 71.57199 1.917442 27.79933

## 5 30.26102 13.393855 19.32450

## 6 30.27183 19.090733 29.87009# remove NA

rgb.data <- na.omit(rgb.data)

lab.data <- na.omit(lab.data)

# retain unique colors

rgb.data <- unique(rgb.data)

lab.data <- unique(lab.data)

# visualize colors in LAB coordinates

pairs(lab.data, col='white', bg=rgb(rgb.data), pch=21, cex=2)# create distance matrix from LAB coordinates

d <- daisy(lab.data, stand=FALSE)

# divisive heirarcical clustering

d.hclust <- as.hclust(diana(d))

# convert to phylo class for nicer plotting

p <- as.phylo(d.hclust)

# perform nMDS on distance matrix

d.sammon <- sammon(d)

# setup multi-figure page

par(mfcol=c(1,2), mar=c(0,0,2,0), bg=grey(0.95))

# plot fan-style dendrogram

plot(p, font=2, cex=0.5, type='fan', show.tip.label=FALSE, main='Dendrogram Representation')

# add colors at dendrogram tips

tiplabels(pch=21, cex=4, col='white', bg=rgb(rgb.data))

# plot nMDS ordination

plot(d.sammon$points, type='n', axes=FALSE, xlab='', ylab='', asp=1, main='nMDS Ordination')

abline(h=0, v=0, col='black', lty=3)

points(d.sammon$points, bg=rgb(rgb.data), pch=21, cex=3.5, col='white')3.5.6 “How Do the Interpretations Compare?”

Example borrowed from this tutorial.

library(reshape2)

# set list of component names, same as soil color example

s.list <- c('amador', 'redding', 'pentz', 'willows', 'pardee', 'yolo',

'hanford', 'cecil', 'sycamore', 'zook')

# get OSD details

s <- fetchOSD(soils = s.list)

# set list of relevant interpretations

interp.list <- c('ENG - Construction Materials; Topsoil',

'ENG - Sewage Lagoons', 'ENG - Septic Tank Absorption Fields',

'ENG - Unpaved Local Roads and Streets')

# compose query

q <- paste0("SELECT UPPER(compname) as compname, mrulename, AVG(interplr) as interplr_mean

FROM component INNER JOIN cointerp ON component.cokey = cointerp.cokey

WHERE compname IN ", format_SQL_in_statement(s.list), "

AND seqnum = 0

AND mrulename IN ", format_SQL_in_statement(interp.list), "

AND interplr IS NOT NULL

GROUP BY compname, mrulename;")

# send query

x <- SDA_query(q)

# reshape long -> wide

x.wide <- dcast(x, compname ~ mrulename, value.var = 'interplr_mean')

kable_styling(knitr::kable(x.wide, digits = 2, caption="Mean Fuzzy Ratings for Select Soil Series"), font_size = 10)| compname | ENG - Construction Materials; Topsoil | ENG - Septic Tank Absorption Fields | ENG - Sewage Lagoons | ENG - Unpaved Local Roads and Streets |

|---|---|---|---|---|

| AMADOR | 0.00 | 1.00 | 1.00 | 0.70 |

| CECIL | 0.41 | 0.66 | 0.86 | 0.29 |

| HANFORD | 0.70 | 0.98 | 0.85 | 0.22 |

| PARDEE | 0.00 | 1.00 | 1.00 | 1.00 |

| PENTZ | 0.00 | 1.00 | 1.00 | 0.68 |

| REDDING | 0.02 | 1.00 | 1.00 | 0.80 |

| SYCAMORE | 0.82 | 1.00 | 0.76 | 0.78 |

| WILLOWS | 0.00 | 1.00 | 0.95 | 1.00 |

| YOLO | 0.85 | 0.90 | 0.61 | 0.61 |

| ZOOK | 0.00 | 1.00 | 1.00 | 1.00 |

# note: component name and series name have been converted to upper case

# sort rows of fuzzy ratings based on profiles from OSDs

new.order <- match(x.wide$compname, profile_id(s))

x.wide <- x.wide[new.order, ]

# copy ids to row.names so that they are preserved in distance matrix

row.names(x.wide) <- x.wide$compname

# create distance matrix

d <- daisy(x.wide[, -1])

# divisive hierarchical clustering

clust <- diana(d)par(mar=c(2,0,2,0))

plotProfileDendrogram(s, clust, dend.y.scale = 2, scaling.factor = 0.007, y.offset = 0.15, width=0.3, cex.names = 0.55, name.style = 'center-center', axis.line.offset = -3)

title('Component Similarity via Select Fuzzy Ratings')

mtext('Profile Sketches are from OSDs', 1)3.5.7 MLRA Concepts via Climate and Elevation Samples

Get and process example data, originally sampled from PRISM raster and DEM within MLRA 15, 18, 22A, and 22B. Variables include:

- elevation

- mean annual air temperature

- mean annuap precipitation

- fraction of annual precipitation as rain

- effective precipitation

- frost-free days

- growing degree days

library(MASS)

library(vegan)

library(cluster)

library(RColorBrewer)

# get example data

# init a temp file

tf <- tempfile()

# download compressed CSV to temp file

download.file('https://github.com/ncss-tech/stats_for_soil_survey/raw/master/data/clustering_and_ordination/MLRA-raster-data-example.csv.gz', destfile = tf, quiet = TRUE)

# read-in from compressed CSV to data.frame object

d.sub <- read.csv(gzfile(tf), stringsAsFactors = FALSE)

# check: note that the column names are quite strange

head(d.sub)

# set factor levels

mu.set <- c('15', '18', '22A', '22B')

d.sub$.id <- factor(d.sub$.id, levels = mu.set)

# define some nice colors

cols <- brewer.pal(9, 'Set1')

# remove light colors

cols <- cols[c(1:5,7,9)]3.5.7.1 Nonmetric Multidimensional Scaling

A smooh surface fit to mean annual air temperature highlights structure within a nMDS ordination.

m <- metaMDS(d.sub[, -c(1:3)], distance = 'gower')

# margins

par(mar=c(1,1,3,1))

# setup plot

o <- ordiplot(m, type='n', axes=FALSE)

# add points, colored accoring to MLRA

points(o, 'sites', cex=1, col=cols[as.numeric(d.sub$.id)], pch=16)

# overlay smooth surface of variable used in ordination

ordisurf(m, d.sub$Mean.Annual.Air.Temperature..degrees.C., add=TRUE, col='black', labcex=1)

legend('topleft', legend=mu.set, pch=16, col=cols, bty='n', cex=1.25)

title('nMDS with mean annual air temperature (deg C) surface')

box()3.5.7.2 Principle Coordinates

This example generates an ordination (via principal coordinates) of environmental variables (PRSIM and elevation) associated with MLRAs 15, 18, 22A, and 22B. Ellipses represent 50% probability contours via multivariate homogeneity of group dispersions. See comments in the code for details. Note that this example was extracted from the Region 2 Map Unit Comparison Report.

Thoughts on interpretation:

- The relative position of points and ellipses are meaningful; absolute position will vary each time the figure is generated.

- Look for “diffuse” vs. “concentrated” clusters: these suggest relatively broadly vs. narrowly defined concepts.

- Nesting of clusters (e.g. smaller cluster contained by larger cluster) suggests super-set/subset relationships.

- Overlap is proportional to similarity.

## NOTE: data with very low variability will cause warnings

# eval numerical distance, removing first 3 columns of IDs

d.dist <- daisy(d.sub[, -c(1:3)], stand=TRUE)

## map distance matrix to 2D space via principal coordinates

d.betadisper <- betadisper(d.dist, group=d.sub$.id, bias.adjust = TRUE, sqrt.dist = FALSE, type='median')

## fancy plot

plot(

d.betadisper, hull=FALSE, ellipse=TRUE, conf=0.5,

col=cols, main='Ordination of Raster Samples\n50% Probability Ellipse', sub='MLRA 15, 18, 22A, 22B'

)Pair-wise comparisons at the 90% level of confidence.

## pair-wise comparisons of variance

par(mar=c(4.5, 5.5, 4.5, 1))

plot(TukeyHSD(d.betadisper, conf.level = 0.9), las=1)This document is based on aqp version 2.3.1 and soilDB version 2.9.0 and sharpshootR version 2.4.1.